After Teen Suicide, Character.AI Lawsuit Raises Questions Over Free Speech Protections

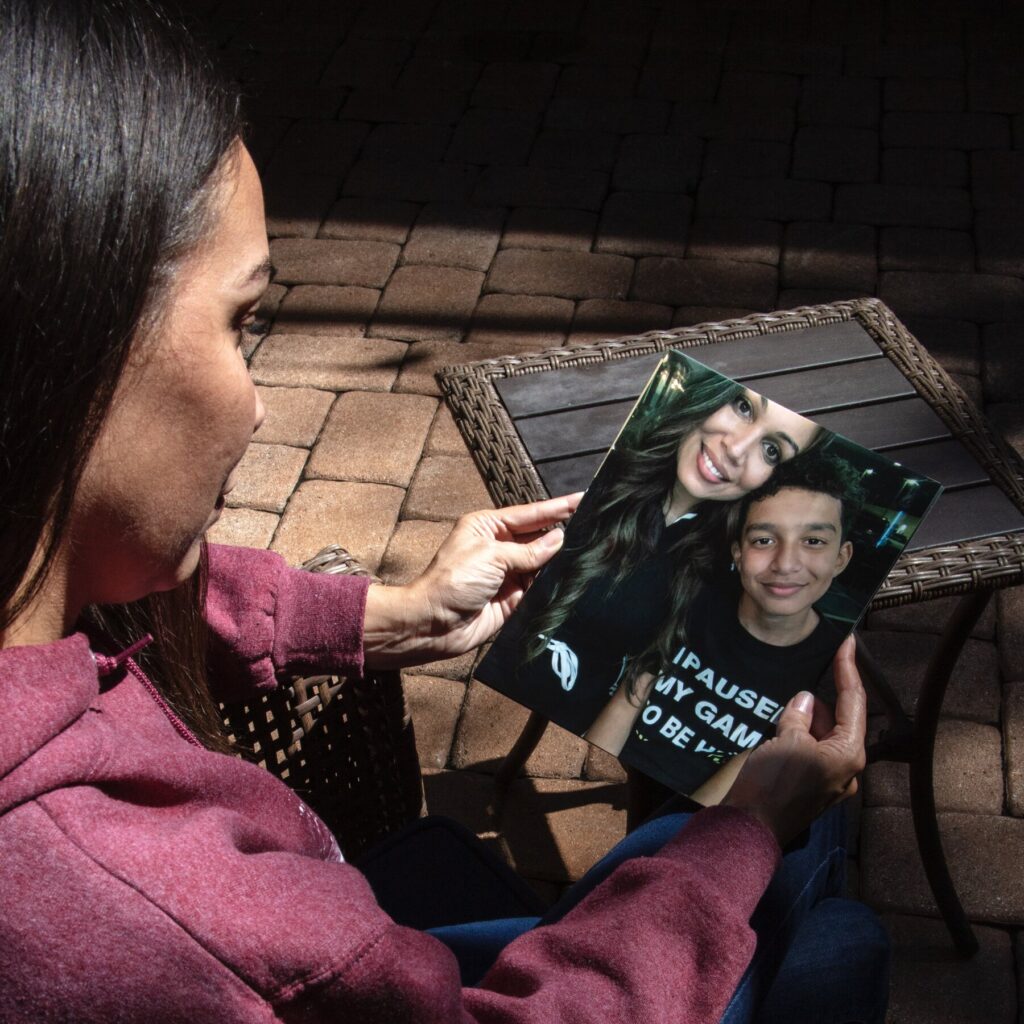

A mother in Florida filed a lawsuit against an A.I. start-up, alleging its product led to her son’s death. The company’s defense raises a thorny legal question.

Read the full article on NY Times Technology

Truth Analysis

Analysis Summary:

The article appears mostly accurate and focuses on the legal and ethical questions raised by the Character.AI lawsuit. Its framing leans slightly toward emphasizing the potential dangers of AI and the need for accountability, but it presents the core facts of the case. Some claims cannot be fully verified without more comprehensive source material.

Detailed Analysis:

- Claim: A mother in Florida filed a lawsuit against an A.I. start-up, alleging its product led to her son’s death.

- Verification Source #5: Confirms a lawsuit filed by a family claiming a Florida teen's suicide was linked to an AI chatbot.

- Verification Source #2: Implies the existence of the lawsuit and the questions it raises.

- Verification Source #4: Confirms the suicide of a local teen and the subsequent questions about AI accountability.

- Assessment: Supported

- Claim: The company’s defense raises a thorny legal question regarding free speech protections.

- Verification Source #1: Confirms the legal question regarding speech protection in the AI case.

- Verification Source #2: States that the company's defense involves free speech protections under the U.S. Constitution.

- Verification Source #3: Confirms that Character Technologies maintains that AI-generated speech is protected under the First Amendment.

- Assessment: Supported

Supporting Evidence/Contradictions:

- Source 5: Florida teen's suicide linked to AI chatbot, family lawsuit claims.

- Source 2: ... free speech protections under the U.S. Constitution should shield them from liability.